This BERT model is based on Habana’s existing BERT model, scaled up to a larger size. The focus of this example is pre-training on the Wikipedia dataset. Once trained, the model can be fine-tuned on different datasets for multiple types of tasks, such as question answering, translation, or text generation.

This specific model is based on the standard BERT architecture and contains 48 transformer layers, a hidden size of 1,600 and 25 attention heads. The combination of these parameters results in a model with roughly 1.5 billion parameters.
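As a sanity check on the 1.5 billion figure, the sketch below counts the weights of a standard BERT encoder (embeddings, transformer layers and pooler; the pre-training heads are left out). It assumes BERT’s usual conventions, which are not stated in this article: a feed-forward size of 4× the hidden size, a 30,522-token vocabulary, 512 positions and 2 token types. The values in Habana’s actual bert_1.5b_config.json may differ slightly.

```python
def bert_param_count(num_layers, hidden, vocab_size=30522,
                     max_positions=512, type_vocab_size=2):
    """Approximate parameter count of a standard BERT encoder (no pre-training heads).

    Assumes the usual BERT layout: FFN size = 4 * hidden, biases on every
    linear layer, and a LayerNorm (weight + bias) after the embeddings,
    the attention block and the FFN block.
    """
    intermediate = 4 * hidden

    # Word, position and token-type embeddings, plus one LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab_size) * hidden + 2 * hidden

    # Self-attention: Q, K, V and output projections (weights + biases) + LayerNorm.
    attention = 4 * (hidden * hidden + hidden) + 2 * hidden

    # Feed-forward: hidden -> intermediate -> hidden (weights + biases) + LayerNorm.
    ffn = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden) + 2 * hidden

    # Pooler: one dense layer applied to the [CLS] token.
    pooler = hidden * hidden + hidden

    return embeddings + num_layers * (attention + ffn) + pooler


for name, layers, hidden in [("BERT-Base", 12, 768), ("BERT 1.5B", 48, 1600)]:
    print(f"{name}: {bert_param_count(layers, hidden) / 1e9:.3f}B parameters")
```

Under these assumptions the count is about 1.53 billion for 48 layers with a hidden size of 1,600, and about 110 million for BERT-Base (12 layers, hidden size 768), the size commonly quoted for BERT-Base. Each layer contributes 12H² + 13H parameters, so the transformer layers dominate the total.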

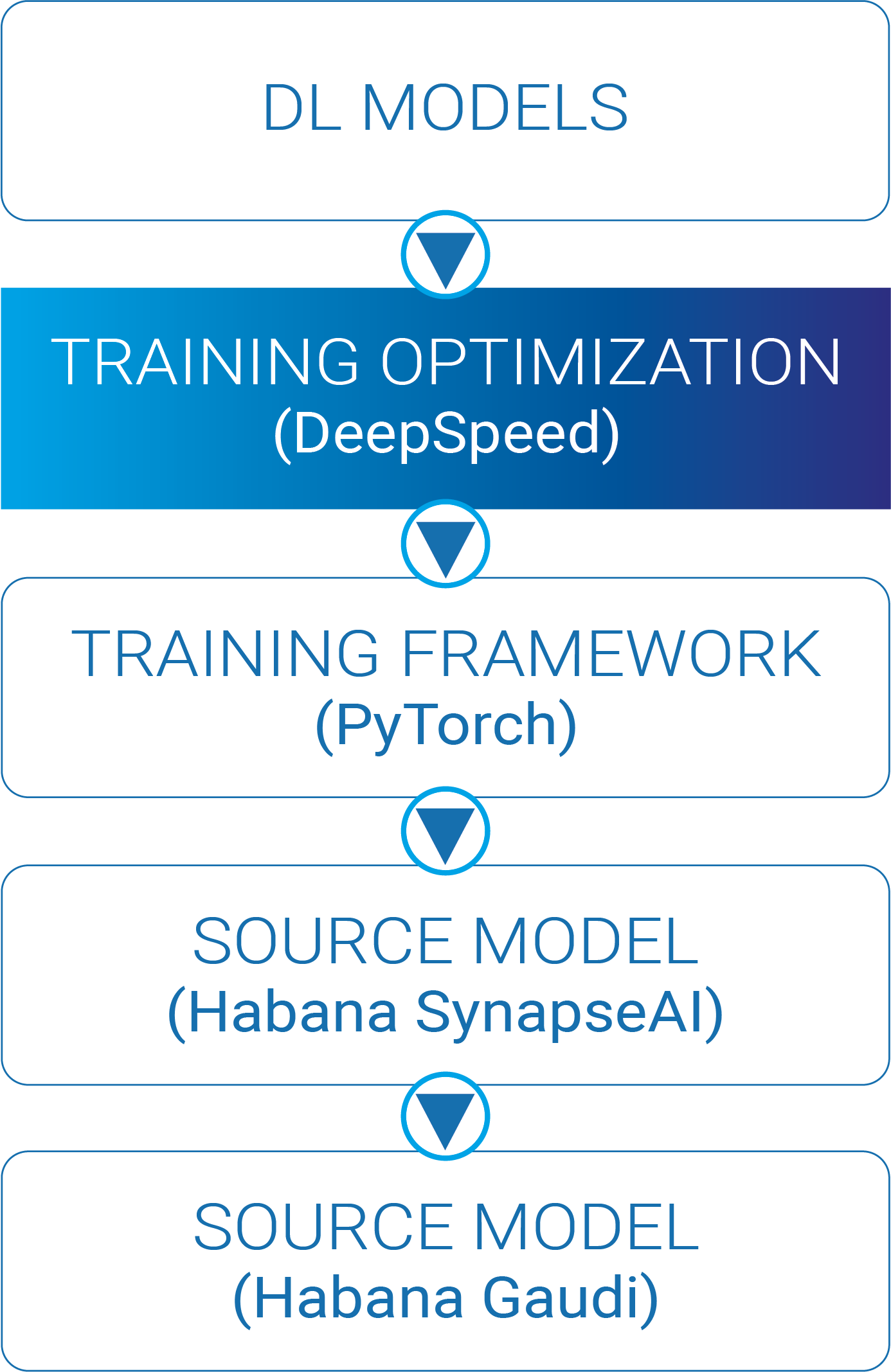

Models of this size may no longer fit in a single device’s memory, causing out-of-memory (OOM) errors. In this example, we use Habana’s fork of the DeepSpeed library to optimize memory usage for model states, including optimizer states and gradients, so that the model can run on Gaudi with the best performance.

DeepSpeed is an open-source deep learning optimization library for PyTorch that is designed to reduce compute and memory usage and to train large, distributed models with better parallelism. DeepSpeed includes the Zero Redundancy Optimizer (ZeRO) for training models; ZeRO is discussed in more detail later in this document. The DeepSpeed library sits between the user’s model and the PyTorch framework, so only minimal changes are needed to an existing PyTorch model. For more information on how Habana uses the ZeRO optimizer, you can refer to our previous blog on memory-efficient training here.

You can follow these simple steps to get up and running on first-gen Gaudi® or Gaudi®2. The first step is to set up the environment, which includes setting up an instance of Gaudi devices, the SynapseAI Software stack, the additional software requirements including Habana’s version of the DeepSpeed library, and then downloading the appropriate dataset for training. The second step is to run the model itself.

Set Up the Environment

Set up your cloud computing environment to get access to the Gaudi accelerator. There are two options available in the cloud today:

- Amazon EC2 DL1 Instances: based on first-gen Gaudi
  - Users can refer to Habana’s quick start guide here for instructions on how to start a DL1 instance; an AWS user account is required.

- Intel AI Cloud using Gaudi2
  - Instructions are provided on the Developer page; a user account will need to be created.

Both instances provide eight Gaudi devices. Make sure the instance has ~1TB of storage so that there is room to download the dataset and to run the model; the dataset download may take several hours to complete. We recommend selecting Ubuntu 20.04 as the base OS and including Habana’s full SynapseAI Software stack and drivers in the image. Once you have set up the environment, pull and run the PyTorch Docker image from Habana’s Vault by following the instructions below. See the Habana Installation Guide here for more details.

```bash
docker pull vault.habana.ai/gaudi-docker/1.7.1/ubuntu20.04/habanalabs/pytorch-installer-1.13.0:latest
```

Example output:

```
latest: Pulling from gaudi-docker/1.7.1/ubuntu20.04/habanalabs/pytorch-installer-1.13.0
846c0b181fff: Pull complete
6ae2e14c2539: Downloading [=====> ] 24.85MB/234.5MB
3fe89580045c: Download complete
1ac28d0b180f: Download complete
c993c6ef6fe8: Downloading [==============================================> ] 27.71MB/30.02MB
6d0e3696a459: Downloading [==============================> ] 2.105MB/3.479MB
05d721556cd2: Waiting
a39276db8326: Pulling fs layer
930126633dfb: Waiting
...
ba5ed4a169e5: Download complete
```

Then start the container:

```bash
docker run -it --runtime=habana -e HABANA_VISIBLE_DEVICES=all \
  -e OMPI_MCA_btl_vader_single_copy_mechanism=none --cap-add=sys_nice --net=host --ipc=host \
  vault.habana.ai/gaudi-docker/1.7.1/ubuntu20.04/habanalabs/pytorch-installer-1.13.0:latest
```

Next, clone Habana’s Model-References repository to get access to the BERT 1.5B model, and install the associated software needed to run the model.

```bash
cd ~
git clone -b 1.7.1 https://github.com/HabanaAI/Model-References
export PYTHONPATH=/root/Model-References:$PYTHONPATH
export PYTHON=/usr/bin/python3.8
cd /root/Model-References/PyTorch/nlp/pretraining/deepspeed-bert/
pip install -r ./requirements.txt
```

Example output:

```
root@ubuntu2004:~# cd ~
root@ubuntu2004:~# git clone -b 1.7.1 https://github.com/HabanaAI/Model-References
Cloning into 'Model-References'...
remote: Enumerating objects: 15256, done.
remote: Counting objects: 100% (15255/15255), done.
remote: Compressing objects: 100% (6660/6660), done.
remote: Total 15256 (delta 8238), reused 15132 (delta 8146), pack-reused 1
Receiving objects: 100% (15256/15256), 101.59 MiB | 8.08 MiB/s, done.
Resolving deltas: 100% (8238/8238), done.
```

Then install Habana’s DeepSpeed fork, which includes additional optimizations for performance and functionality on the Gaudi HPU.

```bash
pip install git+https://github.com/HabanaAI/DeepSpeed.git@1.7.1
```

Example output:

```
root@ubuntu2004:~/Model-References/PyTorch/nlp/pretraining/deepspeed-bert# pip install git+https://github.com/HabanaAI/DeepSpeed.git@1.7.1
Collecting git+https://github.com/HabanaAI/DeepSpeed.git@1.7.1
Building wheels for collected packages: deepspeed
Building wheel for deepspeed (setup.py) ... done
Created wheel for deepspeed:
Successfully installed deepspeed-0.7.0+309ca18 hjson-3.1.0 psutil-5.9.4 py-cpuinfo-9.0.0 pydantic-1.10.4
```

Finally, download and prepare the dataset for training. The script below downloads the Wikipedia dataset as a starting point for running the model. The Wikipedia download alone requires over 600GB of storage, so make sure the cloud computing instance you select is created with ~1TB of storage to allow for both the download and runtime execution. The model includes a simple script that downloads the dataset, establishes some baseline weights for the model (instead of starting from a set of random values), formats and shards the text files, and downloads the .txt files needed for training.
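Because the dataset download alone needs over 600GB, it can save time to check the free space of the target filesystem before starting. The small sketch below does this with the Python standard library’s shutil.disk_usage(); the 600GB threshold is the figure quoted above, and the default path "." is an assumption (run it from, or point it at, the directory you will download into).

```python
import shutil

# Minimum quoted for the Wikipedia download alone; ~1TB total is recommended.
MIN_FREE_BYTES = 600 * 10**9  # 600 GB (decimal units)


def check_free_space(path=".", required_bytes=MIN_FREE_BYTES):
    """Return (enough_space, free_gb) for the filesystem that contains `path`."""
    free_bytes = shutil.disk_usage(path).free
    return free_bytes >= required_bytes, free_bytes / 1e9


if __name__ == "__main__":
    ok, free_gb = check_free_space(".")
    status = "OK" if ok else "less than 600 GB free, the download will likely fail"
    print(f"{free_gb:.0f} GB free: {status}")
```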

```bash
cd ./data
bash create_datasets_from_start.sh
```

Example output:

```
root@ubuntu2004:~/Model-References/PyTorch/nlp/pretraining/deepspeed-bert/data# ./create_datasets_from_start.sh
Checkout WikiExtractor repository
Cloning into 'wikiextractor'...
remote: Enumerating objects: 771, done.
remote: Counting objects: 100% (30/30), done.
remote: Compressing objects: 100% (16/16), done.
remote: Total 771 (delta 17), reused 24 (delta 14), pack-reused 741
Receiving objects: 100% (771/771), 1.31 MiB | 3.11 MiB/s, done.
Resolving deltas: 100% (450/450), done.
...
Downloading: https://dumps.wikimedia.org/enwiki/latest/enwiki-latest-pages-articles.xml.bz2
Running: ['wget', 'https://dumps.wikimedia.org/enwiki/latest/enwiki-latest-pages-articles.xml.bz2', '--output-document=/root/Model-References/PyTorch/nlp/pretraining/deepspeed-bert/data/download/wikicorpus_en/wikicorpus_en.xml.bz2']
https://dumps.wikimedia.org/enwiki/latest/enwiki-latest-pages-articles.xml.bz2
Resolving proxy-us.intel.com (proxy-us.intel.com)... 10.1.192.48
Connecting to proxy-us.intel.com (proxy-us.intel.com)|10.1.192.48|:912... connected.
Proxy request sent, awaiting response... 200 OK
Length: 20580559456 (19G) [application/octet-stream]
Saving to: ‘/root/Model-References/PyTorch/nlp/pretraining/deepspeed-bert/data/download/wikicorpus_en/wikicorpus_en.xml.bz2’
pretraining/deepspeed-bert/data/download/ 17%[----------------> ] 3.35G 4.23MB/s eta 55m 10s
```

Running the Model

Now that the environment has been set up, we can run the full pre-training. For simplicity, Habana has provided a full run script that executes the BERT 1.5B model on 8 cards. This script passes the --deepspeed argument on the run command, along with the path to the DeepSpeed configuration .json file. Before running this script, let’s look at some of the key changes in the model and review what the DeepSpeed engine does with the model.

What Are DeepSpeed and ZeRO?

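DeepSpeed is an optimization library that manages model gradients, optimizer states, the distribution of the workload across the Gaudi accelerators, and system memory. The library abstracts away the difficult aspects of large-scale training, such as parallelization, mixed precision and gradient accumulation, and takes advantage of the ZeRO optimizer. ZeRO reduces memory redundancy in data-parallel training by partitioning model states across devices instead of replicating them on every device: Stage 1 partitions the optimizer states, Stage 2 also partitions the gradients, and Stage 3 also partitions the model parameters.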

Using ZeRO in a DeepSpeed model is quick and easy: all that is needed is to change a few settings in the DeepSpeed configuration JSON. No model code changes are needed to enable the benefits of ZeRO on a model.
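To see why ZeRO matters at this scale, the sketch below applies the model-state accounting from the ZeRO paper: with mixed-precision training and an Adam-style optimizer, each parameter costs 2 bytes of 16-bit weights, 2 bytes of 16-bit gradients and about 12 bytes of optimizer state (FP32 master weights plus two moment buffers). We assume this roughly holds for the LANS optimizer and BF16 mixed precision used by this model; the estimate covers model states only and ignores activations, temporary buffers and framework overhead.

```python
def model_state_gb(num_params, num_devices=1, zero_stage=0, optimizer_bytes=12):
    """Per-device memory (GB) for model states under ZeRO stages 0-3.

    Follows the ZeRO paper's accounting: 2 bytes/param of 16-bit weights,
    2 bytes/param of 16-bit gradients and `optimizer_bytes`/param of
    optimizer state. Activations and temporary buffers are not included.
    """
    params = 2.0 * num_params
    grads = 2.0 * num_params
    optim = float(optimizer_bytes) * num_params
    if zero_stage >= 1:  # Stage 1: partition optimizer states
        optim /= num_devices
    if zero_stage >= 2:  # Stage 2: also partition gradients
        grads /= num_devices
    if zero_stage >= 3:  # Stage 3: also partition parameters
        params /= num_devices
    return (params + grads + optim) / 1e9


n = 1.5e9  # BERT 1.5B
for stage in range(4):
    print(f"ZeRO stage {stage} on 8 devices: {model_state_gb(n, 8, stage):.2f} GB per device")
```

Under these assumptions, plain data parallelism needs about 24 GB per device for model states alone, while ZeRO Stage 1 on eight devices brings that down to about 8.25 GB, leaving far more of each device’s memory (32 GB of HBM on first-gen Gaudi, 96 GB on Gaudi2) for activations.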

Setting up a model for DeepSpeed

Enabling Habana’s BERT 1.5B model for DeepSpeed takes several steps. For more details on how to convert a model to use the DeepSpeed library, you can refer to the DeepSpeed Getting Started guide here. First, initialize DeepSpeed model execution and distribution by calling the deepspeed.initialize() and deepspeed.init_distributed() functions. Examples from the BERT 1.5B model are shown below:

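```python
import deepspeed

# Wrap the model, optimizer and LR scheduler in a DeepSpeed engine. model_parameters
# is only passed when no optimizer object is given, so DeepSpeed builds one itself.
model, optimizer, _, lr_scheduler = deepspeed.initialize(
    args=args,
    model_parameters=None if optimizer else optimizer_grouped_parameters,
    model=model,
    optimizer=optimizer,
    lr_scheduler=lr_scheduler,
)
```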

An important part of the init_distributed() call is that the dist_backend variable is set to “hccl”, which selects Habana’s Collective Communications Library (HCCL). Compared with a plain PyTorch distributed model, deepspeed.init_distributed() replaces the call to torch.distributed.init_process_group(), and the engine returned by deepspeed.initialize() replaces wrapping the model in PyTorch’s DistributedDataParallel().

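```python
import torch
import deepspeed

if args.use_hpu:  # args and init_method are defined earlier in the script
    # These imports load HPU support and register the "hccl" backend
    import habana_frameworks.torch.hpu
    import habana_frameworks.torch.distributed.hccl
    device = torch.device("hpu")
    dist_backend = "hccl"

deepspeed.init_distributed(dist_backend=dist_backend, init_method=init_method)
```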

Additionally, the traditional backward pass is changed from loss.backward() to model.backward(loss), and the optimizer step is changed from optimizer.step() to model.step(), taking advantage of the optimizer built into the DeepSpeed engine. The BERT 1.5B model uses the LANS optimizer.
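A minimal sketch of the resulting training loop is shown below. Here `engine` stands for the object returned by deepspeed.initialize() (named `model` in the snippet above), and `compute_loss` is a hypothetical helper that runs the forward pass and returns the loss; neither name comes from Habana’s script. DeepSpeed’s engine.step() also steps an LR scheduler passed to deepspeed.initialize() and zeroes the gradients, so the loop has no separate optimizer.zero_grad() or lr_scheduler.step() calls.

```python
def train(engine, batches, compute_loss):
    """Run one pass over `batches` using the DeepSpeed engine API.

    engine:       object returned by deepspeed.initialize()
    compute_loss: hypothetical helper, (engine, batch) -> scalar loss
    """
    losses = []
    for batch in batches:
        loss = compute_loss(engine, batch)  # forward pass
        engine.backward(loss)               # instead of loss.backward()
        engine.step()                       # instead of optimizer.step(); at gradient-
                                            # accumulation boundaries it also steps the
                                            # LR scheduler and zeroes the gradients
        losses.append(float(loss))
    return losses
```

Because the loop only touches engine.backward() and engine.step(), swapping a plain PyTorch loop over to DeepSpeed is mostly a matter of replacing those two calls.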

To manage the runtime of the DeepSpeed model, there are two new files that are also used to set the parameters of the execution:

- ds_config.json: the file that sets the DeepSpeed execution hyperparameters, the ZeRO stage, and other DeepSpeed-specific runtime variables. For Habana’s BERT 1.5B model, this file is deepspeed_config_bert_1.5b.json; it sets ZeRO to Stage 1, sets mixed precision to BF16, and sets other variables.

- run_deepspeed.sh: the run script that calls the model, points to deepspeed_config_bert_1.5b.json, and initiates the run on Habana by setting the --use_hpu option in the run command. For Habana’s BERT 1.5B model, this file is called run_bert_1.5b_8x.sh, and it calls the run_pretraining.py script.
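For illustration, the sketch below builds a minimal DeepSpeed configuration with the two settings described above, ZeRO Stage 1 and BF16, using standard DeepSpeed config keys. The batch-size numbers are placeholders, not the values in Habana’s deepspeed_config_bert_1.5b.json, and the real file contains additional settings. DeepSpeed uses the key train_micro_batch_size_per_gpu regardless of the accelerator type.

```python
import json


def make_ds_config(micro_batch_per_device, grad_accum_steps, world_size):
    """Minimal DeepSpeed config dict with ZeRO Stage 1 and BF16 (illustrative only)."""
    return {
        # DeepSpeed checks that train_batch_size ==
        # micro batch per device * gradient accumulation steps * world size.
        "train_batch_size": micro_batch_per_device * grad_accum_steps * world_size,
        "train_micro_batch_size_per_gpu": micro_batch_per_device,
        "gradient_accumulation_steps": grad_accum_steps,
        "bf16": {"enabled": True},          # BF16 mixed precision
        "zero_optimization": {"stage": 1},  # ZeRO Stage 1: partition optimizer states
        "steps_per_print": 10,
    }


# Placeholder values for an 8-device run.
config = make_ds_config(micro_batch_per_device=16, grad_accum_steps=2, world_size=8)
print(json.dumps(config, indent=2))
```

Saved to a .json file, such a configuration reaches deepspeed.initialize() through the --deepspeed_config argument, which is how run_bert_1.5b_8x.sh passes deepspeed_config_bert_1.5b.json.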

Running Habana’s BERT1.5B Model:

Once the initial steps have been completed, you can call the run script:

```bash
bash ./scripts/run_bert_1.5b_8x.sh
```

This will execute the model on eight Gaudi HPUs and create a set of checkpoints for use in the fine-tuning steps later.

Beginning of the run script output:

```
root@ubuntu2004:~/Model-References/PyTorch/nlp/pretraining/deepspeed-bert# bash ./scripts/run_bert_1.5b_8x.sh
[runner.py:517:main] cmd = /usr/bin/python3 -u -m deepspeed.launcher.launch --world_info=eyJsb2NhbGhvc3QiOiBbMCwgMSwgMiwgMywgNCwgNSwgNiwgN119 --master_addr=127.0.0.1 --master_port=29500 --no_python --no_local_rank python -u ./run_pretraining.py --use_hpu --disable_progress_bar --optimizer=lans --use_lr_scheduler --resume_from_checkpoint --do_train --bert_model=bert-base-uncased --config_file=./scripts/bert_1.5b_config.json --json-summary=./results/bert_1.5b/dllogger.json --output_dir=./results/bert_1.5b/checkpoints --seed=12439 --input_dir=/data/pytorch/bert_pretraining/hdf5_lower_case_1_seq_len_128_max_pred_20_masked_lm_prob_0.15_random_seed_12345_dupe_factor_5/books_wiki_en_corpus --max_seq_length 128 --max_predictions_per_seq=20 --max_steps=155000 --steps_this_run=-1 --num_steps_per_checkpoint=200 --learning_rate=0.0015 --warmup_proportion=0.05 --constant_proportion=0.25 --scheduler_degree=1.0 --log_freq=10 --deepspeed --deepspeed_config=./scripts/deepspeed_config_bert_1.5b.json
[INFO] [launch.py:139:main] WORLD INFO DICT: {'localhost': [0, 1, 2, 3, 4, 5, 6, 7]}
[INFO] [launch.py:145:main] nnodes=1, num_local_procs=8, node_rank=0
[INFO] [launch.py:158:main] global_rank_mapping=defaultdict(<class 'list'>, {'localhost': [0, 1, 2, 3, 4, 5, 6, 7]})
[INFO] [launch.py:159:main] dist_world_size=8
Distributed training with backend=hccl, device=hpu, local_rank=3
Distributed training with backend=hccl, device=hpu, local_rank=5
Distributed training with backend=hccl, device=hpu, local_rank=7
Distributed training with backend=hccl, device=hpu, local_rank=1
Distributed training with backend=hccl, device=hpu, local_rank=0
Distributed training with backend=hccl, device=hpu, local_rank=2
Distributed training with backend=hccl, device=hpu, local_rank=4
Distributed training with backend=hccl, device=hpu, local_rank=6
[INFO] [comm.py:628:init_distributed] Initializing TorchBackend in DeepSpeed with backend hccl
Using LANS
Using PolyWarmUpScheduler with args={'warmup': 0.05, 'total_steps': 155000.0, 'degree': 1.0, 'constant': 0.25}
```

End of the run script output:

```
[INFO] [engine.py:3268:_save_zero_checkpoint] zero checkpoint saved deepspeed-run/1.7.1-85/bert_1.5b/8/2023-01-04_17-52/checkpoints/20/bf16_zero_pp_rank_0_mp_rank_00_optim_states.pt
[INFO] [torch_checkpoint_engine.py:27:commit] [Torch] Checkpoint 20 is ready now!
[INFO] [torch_checkpoint_engine.py:27:commit] [Torch] Checkpoint 20 is ready now!
[INFO] [torch_checkpoint_engine.py:27:commit] [Torch] Checkpoint 20 is ready now!
[INFO] [torch_checkpoint_engine.py:27:commit] [Torch] Checkpoint 20 is ready now!
[INFO] [torch_checkpoint_engine.py:27:commit] [Torch] Checkpoint 20 is ready now!
[INFO] [torch_checkpoint_engine.py:27:commit] [Torch] Checkpoint 20 is ready now!
[INFO] [torch_checkpoint_engine.py:27:commit] [Torch] Checkpoint 20 is ready now!
DLL 2023-01-04 18:43:28.908788 - e2e_train_time : 2313.8388447761536 training_sequences_per_second : 63.06757577335175 final_loss : 11.55532455444336 raw_train_time : 1948.3862903118134
[INFO] [launch.py:322:main] Process 12605 exits successfully.
[INFO] [launch.py:322:main] Process 12609 exits successfully.
[INFO] [launch.py:322:main] Process 12608 exits successfully.
[INFO] [launch.py:322:main] Process 12610 exits successfully.
[INFO] [launch.py:322:main] Process 12607 exits successfully.
[INFO] [launch.py:322:main] Process 12606 exits successfully.
[INFO] [launch.py:322:main] Process 12604 exits successfully.
[INFO] [launch.py:322:main] Process 12603 exits successfully.
/usr/local/lib/python3.8/dist-packages/habana_frameworks/torch/core/__init__.py:94: UserWarning: habana_frameworks.torch.core.get_device_count is deprecated. Please use habana_frameworks.torch.hpu.device_count
  warnings.warn("habana_frameworks.torch.core.get_device_count is deprecated. "
LOG_FILE = /deepspeed-bert_1.5b-8-p1-2023-01-04_17-52.log
TOPOLOGY = pretraining
BATCH_SIZE =
WORLD_SIZE = 8
Pretraining phase1 results
8 card(s) average sentences per second:302.5176
average dps: 37.8147
```

Results

Below is the throughput, as well as the overall memory consumption, for the BERT 1.5B parameter model running on 8, 16, 32, 64 and 128 devices. If the ZeRO optimizer were not used, the model would not fit in Gaudi’s memory and would cause an out-of-memory (OOM) error.
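When comparing runs on different device counts, it helps to normalize throughput per device. In the 8-card log above, the 302.5176 sentences per second reported across all eight cards divided by 8 gives the 37.8147 reported as "average dps", so dps appears to be the per-device figure (our reading of the log, not documented here). The sketch below computes per-device throughput and scaling efficiency relative to a baseline run; scaling efficiency is a common metric we introduce for illustration, and the figures for 16 to 128 devices are not reproduced here, so the example uses only the 8-card number.

```python
def per_device_throughput(total_throughput, num_devices):
    """Average throughput per device (e.g. sentences/s per Gaudi card)."""
    return total_throughput / num_devices


def scaling_efficiency(base_devices, base_throughput, devices, throughput):
    """Per-device throughput at `devices`, relative to the baseline run.

    1.0 means perfectly linear scaling from the baseline device count.
    """
    return (per_device_throughput(throughput, devices)
            / per_device_throughput(base_throughput, base_devices))


# Aggregate throughput reported for the 8-card run in the log above.
total_8 = 302.5176
print(f"per device: {per_device_throughput(total_8, 8):.4f} sentences/s")
```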

Next Steps

You are invited to experiment with several options for running DeepSpeed based models on Gaudi. You can use one of the following examples:

- A simple CIFAR-based model that uses DeepSpeed to show how to get started, in the Habana Model-References GitHub repository here

- A DeepSpeed tutorial with a configurable model that lets you change the model size and experiment with the effects of ZeRO Stage 1, ZeRO Stage 2 and activation checkpointing here

- The model from this paper: BERT 1.5B on 8 Gaudi devices and 5B on 128 Gaudi devices here

- Hugging Face GPT-2 example here