This post will provide an example of implementing the LLaMA model with Megatron-DeepSpeed.

Understanding your model

To make things easier, it is important to understand which existing model is the closest to our new model and to map the differences between them. In the case of LLaMA, the model is similar to GPT and we can use the latter as a basis. We should also note we may need to use a different tokenizer than GPT, depending on our needs and data.

The main differences between LLaMA model family and GPT model family include:

- Using different activation functions

- Different positional embeddings

- Different layer normalizations

- LLaMA model also includes additional layer normalization after all transformer layers

- LLaMA uses different weights for embedding layers at the beginning and end of the model

- The projected hidden size used in the MLP layers in LLaMA is smaller than GPT’s

- LLaMA does not use bias in linear layers

Implementing your model

All models in Megatron-DeepSpeed have two unique files: x_model.py (e.g. gpt_model.py) where the model architecture is defined, and pretrain_x.py to handle details for training (e.g. argument management, auxiliary functions, etc.).

When implementing a new model, you might need additional arguments or some modifications to existing ones. New arguments will generally be added to the arguments.py file. However, there may be some new arguments that are unique to your model and in that case, you can add them using the ‘extra args provider’ in pretrain_x.py without adding them to the general arguments.py, which are used by all models.

You may also need to set new default values matching your model setting to existing arguments. For that purpose, Megatron-DeepSpeed passes the ‘args defaults’ dictionary when calling the pretrain function.

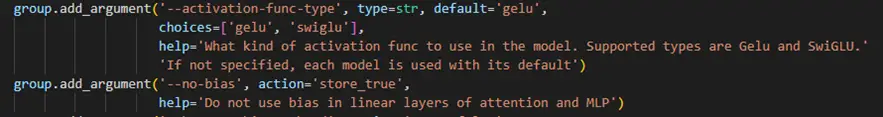

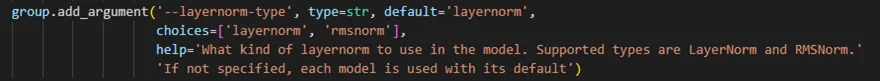

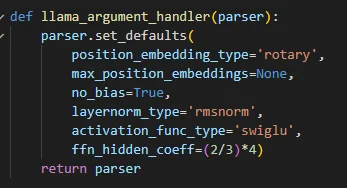

For LLaMA, we will need to add RMSNorm (to replace layer normalization) and the SwiGLU activation function. To support all options (including the existing layernorm and gelu activation function used in other models), we add new arguments ‘layernorm-type’ and ‘activation-func-type’ to arguments.py. We will also add the ‘no-bias’ since LLaMA uses linear layers without bias. LLaMA uses rotary positional embeddings (aka RoPE), but as the code already supports different types of positional embeddings, we just add ‘rotary’ as an additional option to the existing ‘position-embedding-type’ argument. We also use the extra args provider to set all required defaults to match LLaMA:

Our new llama_model.py will be very similar to gpt_model.py. You should consider the flow you wish to use. Keep in mind that although Megatron-DeepSpeed supports both pipe or non-pipe module, Habana supports the pipe module only (even when not using pipeline parallelism).

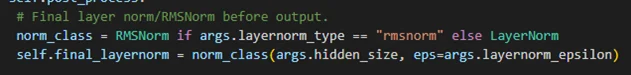

The forward function of the model is defined under the LLaMAModel class in this file. Some of the changes we apply are in other classes, like replacing LayerNorm layers to RMSNorm according to the relevant argument in ParallelTransformer:

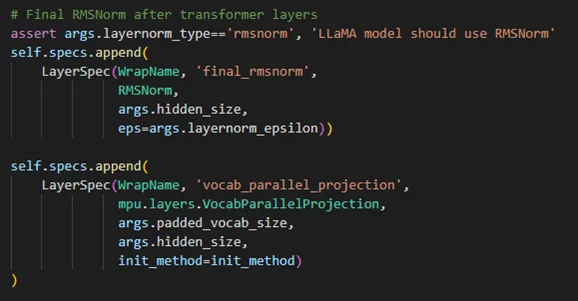

Pipe module is defined as a different class (LLaMAModelPipe vs LLaMAModel). The pipe module uses DeepSpeed’s pipe engine allowing the use of model parallelism (tensor and pipeline). For the pipe module, we define the sequence of all layers using LayerSpec and TiedLayerSpec for untied/tied layers. Tied layers are used to handle the weight sharing between layers, e.g., for the embedding layers in GPT. In LLaMA we don’t use tied layers for the embeddings and define the ‘vocab parallel projection’ as the last embedding layer.

Adding new layer

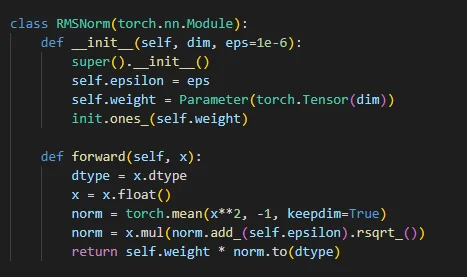

Some of the layers of your new model might not be implemented already. For example, for LLaMA we need to add RMSNorm implementation. In addition to replacing all layer normalization to RMSnorm layers, we add rmsnorm.py in megatron/model.

Modifying existing implementation

You may also need to insert modifications to the existing implementation. For example, to use the SwiGLU activation function, we edit the forward function itself in transformer.py: