Multi-node scale-out of Gaudi accelerator devices via a host Network Interface Controller (NIC) traditionally requires copying data from device memory to host memory before it is sent across the network to other devices. This increases CPU overhead, creates latency bottlenecks, and lowers training performance. The problem becomes more apparent when scaling to larger models and a larger number of devices, which require more network communication and therefore slow down overall model training.

To remove this overhead, we collaborated with AWS to enable Elastic Fabric Adapter (EFA) Peer Direct for multi-node communication on AWS EC2 DL1 instances. EFA is an Elastic Network Adapter (ENA) for Amazon EC2 instances with added capabilities, including the ability to bypass the OS kernel and communicate directly with the NIC. Peer Direct lets data be read from and written to device memory directly, bypassing the host CPU. This removes unnecessary memory copies, decreases CPU overhead, and reduces latency. EFA Peer Direct significantly improves performance for collective operations such as All-Reduce and All-Gather, which are pivotal for large-scale distributed training. Support for EFA Peer Direct on DL1 instances became available with Habana’s SynapseAI 1.8.0 release in February 2023.

Running EFA Peer Direct on AWS EC2 DL1 instances is simple. The steps to enable it are:

- Preparing an EFA-enabled security group

- Launching DL1 instances on Habana-supported AMIs. Habana AMIs require no additional packages to run EFA.

- Enabling the EFA NICs. DL1 instances support up to 4 EFA-enabled NICs.

- Setting the environment variables `RDMAV_FORK_SAFE=1` and `FI_EFA_USE_DEVICE_RDMA=1` in the training scripts.

- Running multi-node training on DL1.
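The environment-variable step above can be sketched in Python as follows. This is a minimal illustration, assuming training is launched with Open MPI's `mpirun`, whose `-x NAME=VALUE` flag exports a variable to every rank; the helper names and the `train.py` script are hypothetical, and the comments give the usual meaning of each variable in rdma-core and the libfabric EFA provider rather than Habana-specific guidance.

```python
import os
from typing import Dict, List, Mapping, Optional

# The two variables named in the steps above.
EFA_PEER_DIRECT_ENV: Dict[str, str] = {
    "RDMAV_FORK_SAFE": "1",         # rdma-core: keep RDMA-registered memory safe across fork()
    "FI_EFA_USE_DEVICE_RDMA": "1",  # libfabric EFA provider: use the EFA device's RDMA features
}


def efa_peer_direct_env(base: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return a copy of `base` (default: current environment) with the EFA Peer Direct variables set."""
    env = dict(os.environ if base is None else base)
    env.update(EFA_PEER_DIRECT_ENV)
    return env


def mpirun_export_flags() -> List[str]:
    """Hypothetical helper: build Open MPI `-x NAME=VALUE` flags so every rank sees the variables."""
    flags: List[str] = []
    for name, value in EFA_PEER_DIRECT_ENV.items():
        flags += ["-x", f"{name}={value}"]
    return flags


if __name__ == "__main__":
    # Hypothetical launch command for 32 ranks (4 DL1 instances x 8 Gaudi devices);
    # a real multi-node run also needs a hostfile. Only printed here, not executed.
    cmd = ["mpirun", "-np", "32", *mpirun_export_flags(), "python", "train.py"]
    print(" ".join(cmd))
```

Setting the variables in the launcher rather than partway through the training script is a deliberate choice: the communication libraries typically read them when they initialize, so they should be in place before that happens on every node.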

For more information, refer to the Scale-Out via Host-NIC documentation.

Habana Collective Communication Library (HCCL) Performance on 32 Gaudi devices

Table 1 shows the performance gains from EFA Peer Direct communication. We measure the performance of the All-Reduce, All-Gather and Reduce-Scatter collective operations for 32 MB messages on 32 Gaudi devices, i.e., 4 DL1 instances with 8 Gaudi devices each.

| Collective operator | Performance using EFA without Peer Direct (MB/s) | Performance using EFA with Peer Direct (MB/s) | Performance improvement with EFA Peer Direct |
|---|---|---|---|
| All-Reduce | 12110 | 21136 | 154% |
| All-Gather | 20299 | 41082 | 202% |
| Reduce-Scatter | 9249 | 17418 | 188% |

Running with EFA Peer Direct yields a 1.5-2x increase in performance across the different collective operators for 32 MB messages. Eliminating the extra copies through host memory also speeds up training; the size of that gain depends on the number of collective operations performed, and therefore varies by model and framework.
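To make the difference between the three collectives in Table 1 concrete, the following single-process Python sketch simulates what each rank holds after each operation. It is a hedged illustration of the semantics only, using sum as the reduction and plain lists in place of device buffers; it does not reflect how HCCL implements or schedules these operations.

```python
from typing import List

Vector = List[float]


def all_reduce(inputs: List[Vector]) -> List[Vector]:
    """Every rank ends up with the element-wise sum of all ranks' vectors."""
    total = [sum(vals) for vals in zip(*inputs)]
    return [list(total) for _ in inputs]


def reduce_scatter(inputs: List[Vector]) -> List[Vector]:
    """The summed vector is split into equal chunks; rank r keeps only chunk r."""
    n = len(inputs)
    total = [sum(vals) for vals in zip(*inputs)]
    chunk = len(total) // n  # assumes the length divides evenly by the number of ranks
    return [total[r * chunk:(r + 1) * chunk] for r in range(n)]


def all_gather(inputs: List[Vector]) -> List[Vector]:
    """Every rank ends up with the concatenation of all ranks' vectors."""
    gathered = [x for vec in inputs for x in vec]
    return [list(gathered) for _ in inputs]


if __name__ == "__main__":
    # Two ranks with four elements each.
    ranks = [[1.0, 2.0, 3.0, 4.0], [10.0, 20.0, 30.0, 40.0]]
    print(all_reduce(ranks))      # [[11.0, 22.0, 33.0, 44.0], [11.0, 22.0, 33.0, 44.0]]
    print(reduce_scatter(ranks))  # [[11.0, 22.0], [33.0, 44.0]]
    print(all_gather(ranks))      # each rank holds all 8 input elements
    # All-Reduce gives the same result as Reduce-Scatter followed by All-Gather.
    assert all_reduce(ranks) == all_gather(reduce_scatter(ranks))
```

The last check shows why the three operations are often discussed together: an All-Reduce can be decomposed into a Reduce-Scatter followed by an All-Gather.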

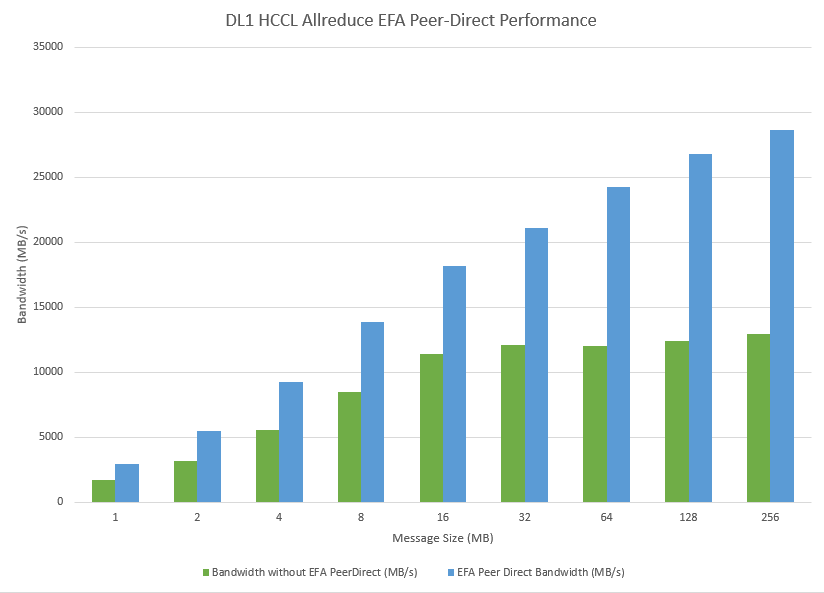

To see how the impact varies with message size, we ran an additional experiment on 32 Gaudi devices with the All-Reduce operation across a range of message sizes. This gives further insight into different communication scenarios and into how the gains from EFA Peer Direct scale.

As shown in Figure 1, without EFA Peer Direct, throughput plateaus at around 12,000 MB/s (about 12 GB/s) for larger message sizes, primarily because CPU overhead becomes a bottleneck that limits overall performance. With EFA Peer Direct, this bottleneck is removed and throughput is significantly higher for larger message sizes (for instance, up to 1.76x at a 256 MB message size). For models with a large number of collective operations such as All-Reduce, EFA Peer Direct significantly improves inter-instance communication.
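The shape of this curve can be illustrated with the textbook latency-bandwidth (alpha-beta) cost model, which is a swapped-in technique rather than Habana's own analysis. The sketch below assumes, hypothetically, that staging through host memory adds a serial per-megabyte copy cost with no overlap; all parameter values are illustrative and are not measured DL1 or Gaudi numbers.

```python
# Hypothetical, illustrative parameters; NOT measured DL1/Gaudi values.
LATENCY_S = 50e-6         # fixed per-operation cost (alpha)
NET_MB_PER_S = 10_000.0   # network bandwidth
COPY_MB_PER_S = 10_000.0  # device-to-host staging copy bandwidth


def throughput_mb_s(size_mb: float, per_mb_s: float, latency_s: float = LATENCY_S) -> float:
    """Alpha-beta model: transfer time = latency + size * per-MB cost."""
    return size_mb / (latency_s + size_mb * per_mb_s)


def per_mb_cost(peer_direct: bool) -> float:
    """Seconds per MB (beta) for the direct path or the host-staged path."""
    cost = 1.0 / NET_MB_PER_S
    if not peer_direct:
        # Assumption: staging through host memory adds a serial copy with no overlap.
        cost += 1.0 / COPY_MB_PER_S
    return cost


if __name__ == "__main__":
    for size in (0.01, 1, 32, 256):
        staged = throughput_mb_s(size, per_mb_cost(peer_direct=False))
        direct = throughput_mb_s(size, per_mb_cost(peer_direct=True))
        print(f"{size:>7} MB  staged {staged:8.0f} MB/s  direct {direct:8.0f} MB/s  "
              f"ratio {direct / staged:.2f}")
```

In this toy model the staged path saturates at a lower ceiling (here 5,000 MB/s versus 10,000 MB/s) because every megabyte pays for the copy as well as the network transfer, while at small message sizes the fixed latency dominates and the two paths perform almost the same.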

Accelerating Distributed Model Training with EFA Peer Direct

Next, let’s look at model training performance, specifically multi-instance distributed training. Our candidate model is Habana’s DeepSpeed BERT 5B-parameter model running on 16 DL1 instances (128 Gaudi HPUs). This model uses ZeRO stage 2 (ZeRO-2) to reduce memory consumption at the cost of more collective operations, which makes it a good candidate for demonstrating the EFA Peer Direct capabilities.

We use the model configuration shown in Table 2 and measure performance with Habana SynapseAI version 1.8.0.

| Field | Value |
|---|---|
| # of EFA NICs | 4 |
| Model | DeepSpeed BERT 5B |
| ZeRO Optimization Stage | ZeRO-2 |
| Global Batch Size | 12,288 |
| Micro Batch Size (per HPU) | 24 |
| Gradient Accumulation Steps | 4 |
| Activation Checkpoint | Yes |
| Optimizer | LANS |
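The batch settings in Table 2 are consistent with each other: the global batch size is the micro batch per HPU times the gradient accumulation steps times the number of HPUs (24 × 4 × 128 = 12,288). The sketch below checks this and builds a partial DeepSpeed-style config dictionary. It assumes 8 Gaudi devices per DL1 instance and uses standard DeepSpeed config keys, but it is not the configuration file used by Habana's model; the LANS optimizer and activation checkpointing are left out because how they are wired into that model is not shown here.

```python
import json

# Table 2 values; the world size assumes 16 DL1 instances with 8 Gaudi devices each.
NUM_INSTANCES = 16
DEVICES_PER_INSTANCE = 8
MICRO_BATCH_PER_DEVICE = 24
GRAD_ACCUM_STEPS = 4

world_size = NUM_INSTANCES * DEVICES_PER_INSTANCE  # 128 Gaudi HPUs
global_batch = MICRO_BATCH_PER_DEVICE * GRAD_ACCUM_STEPS * world_size

# DeepSpeed checks that train_batch_size equals
# micro batch size * gradient accumulation steps * world size.
assert global_batch == 12_288

# Partial DeepSpeed-style config: only the batch and ZeRO settings are shown.
# The DeepSpeed key keeps the "_per_gpu" name even when the device is an HPU.
ds_config = {
    "train_batch_size": global_batch,
    "train_micro_batch_size_per_gpu": MICRO_BATCH_PER_DEVICE,
    "gradient_accumulation_steps": GRAD_ACCUM_STEPS,
    "zero_optimization": {"stage": 2},  # ZeRO-2: partition optimizer states and gradients
}

print(json.dumps(ds_config, indent=2))
```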

Table 3 shows the model throughput performance with and without Peer Direct enabled.

| Scale-Out Mode | Throughput (samples per second) | Performance Improvement |
|---|---|---|
| Without Peer Direct | 391.44 | (baseline) |
| With Peer Direct | 674.73 | 153% |

With EFA Peer Direct, model throughput increases by ~1.5x. This improvement shortens training time with minimal additional effort.

EFA Peer Direct on Gaudi-based Amazon EC2 DL1 instances provides significant performance improvements for multi-instance distributed training jobs. To take advantage of EFA Peer Direct on DL1, follow the instructions for getting started with EFA on Amazon EC2 and for accessing first-generation Gaudi on Amazon EC2 DL1 instances.