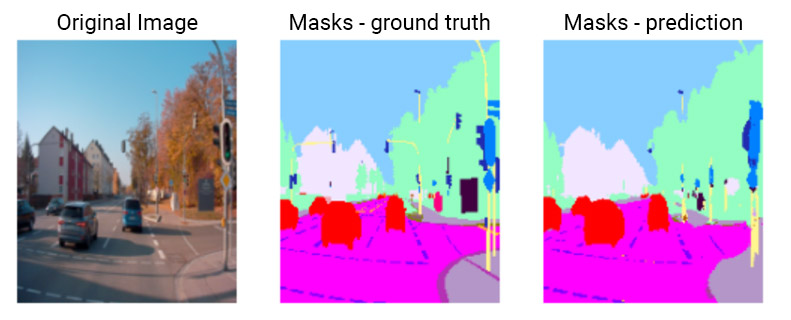

A critical part of autonomous driving is building a detailed description of the vehicle's surroundings. This example shows how to train a model on a Gaudi system to perform semantic segmentation on a dataset of street-level driving images. Semantic segmentation assigns a label, or category, to every pixel in an image, so that groups of pixels can be recognized as distinct objects; the resulting labels can then be passed to the vehicle's autonomous driving control system. In this case, an autonomous vehicle needs to identify vehicles, pedestrians, traffic signs, pavement, and other road features. In the example image, each category is represented by a separate color.
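To make the per-pixel labeling concrete, here is a minimal sketch in plain Python. It assumes the model outputs one score per class for every pixel; the class ids, names, and colors in `PALETTE` are hypothetical and are not the actual A2D2 mapping. A real pipeline would do this on tensors rather than nested lists.

```python
# Hypothetical palette: class ids, names, and colors are illustrative only,
# not the real A2D2 category mapping.
PALETTE = {
    0: ("road", (128, 64, 128)),
    1: ("vehicle", (0, 0, 142)),
    2: ("pedestrian", (220, 20, 60)),
}


def predict_labels(scores):
    """Pick the highest-scoring class id for every pixel.

    scores[row][col] is a list holding one score per class for that pixel.
    """
    return [
        [max(range(len(px)), key=px.__getitem__) for px in row]
        for row in scores
    ]


def colorize(labels):
    """Replace each class id with its RGB color to build a viewable mask."""
    return [[PALETTE[c][1] for c in row] for row in labels]


# A 2x3 "image" with three class scores per pixel (made-up numbers).
scores = [
    [[0.9, 0.05, 0.05], [0.2, 0.7, 0.1], [0.1, 0.2, 0.7]],
    [[0.8, 0.1, 0.1], [0.6, 0.3, 0.1], [0.3, 0.3, 0.4]],
]

labels = predict_labels(scores)  # one class id per pixel
mask = colorize(labels)          # one RGB tuple per pixel
```

The `labels` grid is what a downstream system would consume; the colored `mask` exists only for visualization.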

This example uses the nnU-Net model for semantic segmentation, a self-adapting framework based on the original U-Net 2D model and optimized for Habana’s SynapseAI Software Stack. We demonstrate how to train this model from scratch using the Audi Autonomous Driving Dataset (A2D2), which features 41,280 images in 38 categories. You will see how easy and fast it is to train this model on a Gaudi system, and how you can further accelerate training by using multiple Gaudi accelerators. In this case, eight Gaudi accelerators are used, and the model trains in only 2 hours.
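One common way multiple accelerators speed up training is data parallelism, where each worker processes a different slice of the dataset in every epoch. The sketch below assumes that approach with a simple round-robin split. The helper `shard_indices` is hypothetical, and the full 41,280-image figure is used purely for illustration, since the notebook's actual train/validation split and launcher are not described here.

```python
def shard_indices(num_samples, world_size, rank):
    """Return the sample indices handled by one worker (round-robin split)."""
    return range(rank, num_samples, world_size)


NUM_IMAGES = 41_280  # dataset size quoted in the text
WORLD_SIZE = 8       # assumption: one worker per Gaudi accelerator

shards = [shard_indices(NUM_IMAGES, WORLD_SIZE, r) for r in range(WORLD_SIZE)]
per_worker = [len(s) for s in shards]

for rank, count in enumerate(per_worker):
    print(f"worker {rank}: {count} images per epoch")
```

Under these assumptions each worker sees one eighth of the images per epoch, which is where the reduction in wall-clock time comes from.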

This autonomous vehicle use case is available as a Jupyter notebook on the Gaudi-Solutions GitHub page. Please refer to the installation guide for more information. To run the use case, you can access Gaudi or Gaudi2 in one of two ways:

- Amazon EC2 DL1 Instances: based on first-gen Gaudi

  - Users can refer to Habana’s quick start guide here for instructions on how to start a DL1 instance; an AWS user account is required.

- Intel Developer Cloud using Gaudi2

  - Instructions are provided on the Developer page; a user account will need to be created.