Fine Tuning the Llama2 Model with LoRA

When using Generative AI (GenAI), fine-tuning large language models (LLMs) like Llama2 presents unique challenges due to the computational and memory demands of the workload. However, using Low-Rank Adaptation (LoRA) on Intel Gaudi AI accelerators presents a powerful option for tuning state-of-the-art (SoTA) LLMs faster and at reduced cost. This capability makes it easier for researchers and application developers to unlock the potential of larger models.

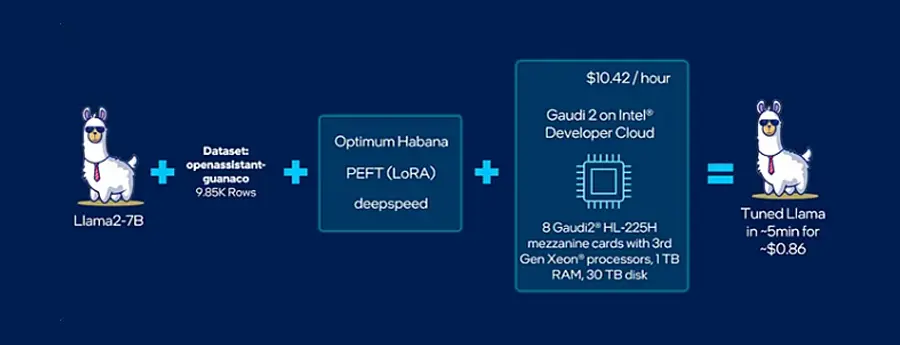

In this tutorial, we will explore leveraging LoRA to fine-tune SoTA models like Llama2-7B-hf in under 6 minutes for ~$0.86 on the Intel Developer Cloud (Figure 1). We will cover the following topics:

- Setting up a development environment for LoRA fine-tuning on Intel Gaudi2 AI accelerators

- Fine-tuning Llama2 with LoRA on the openassistant-guanaco dataset using the Optimum Habana Hugging Face library and Intel Gaudi2 AI accelerators (HPUs)

- Performing inference with the LoRA-tuned Llama2-7B-hf and comparing response quality to a raw pre-trained Llama2 baseline

You will be able to leverage this article’s insights and sample code to enhance your LLM development process. This will enable you to quickly experiment with various hyperparameters, datasets, and pre-trained models, ultimately speeding up the optimization of SoTA LLMs for your GenAI applications.

Introduction to Parameter Efficient Fine-Tuning with Low-Rank Adaptation (LoRA)

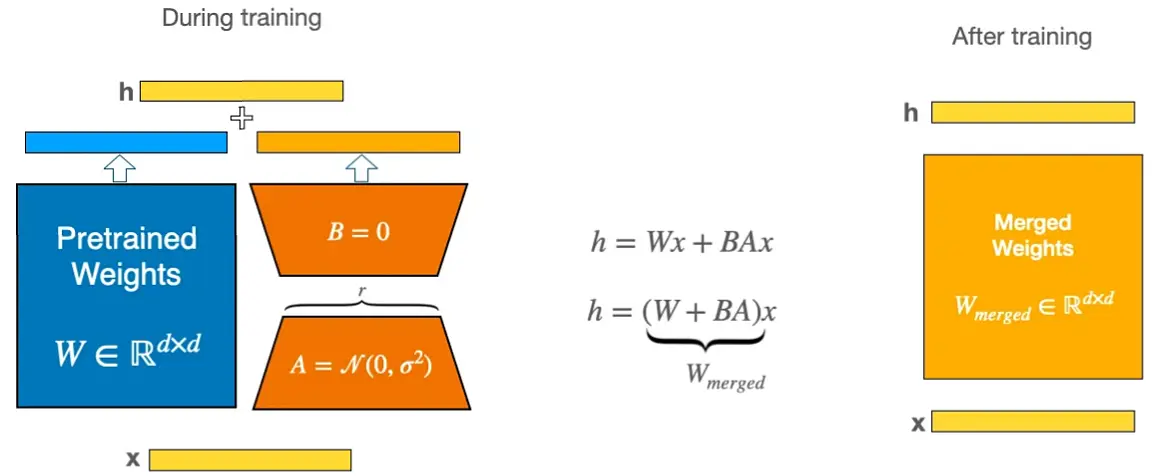

At its core, the theory behind LoRA revolves around matrix factorization and the principle of low-rank approximations. In linear algebra, a large matrix can often be approximated closely by the product of two much smaller matrices of low rank. LoRA applies this idea to the weight updates learned during fine-tuning: the pre-trained weights are kept frozen, and the change to each adapted weight matrix is represented as the product of two small, trainable low-rank matrices. By doing so, LoRA aims to capture the most influential directions of the update while leaving out the extraneous ones, without significant loss of information.

Why does this low-rank approach work, especially in large-scale neural networks? The answer lies in the intrinsic structure of the data these models deal with. High-dimensional data, like those processed by deep learning models, often reside in lower-dimensional subspaces. Essentially, not all dimensions or features are equally crucial. LoRA taps into this principle by creating an effective subspace where the neural network’s parameters live. This process involves introducing new, task-specific parameters while constraining their dimensionality (using low-rank matrices), thus ensuring they can be efficiently fine-tuned on new tasks. This matrix factorization trick enables the neural network to gain new knowledge without retraining its entire parameter space, providing computational efficiency and rapid adaptability to new tasks.
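To make the low-rank idea concrete, here is a minimal sketch in plain Python with no deep learning framework. It assumes the standard LoRA formulation, in which a frozen weight matrix W (d_out × d_in) is augmented by a trainable update (alpha / r)·B·A, where B is d_out × r and A is r × d_in. The sizes, the rank r, and the scaling factor alpha are illustrative values chosen for this example, not settings taken from this tutorial.

```python
import random


def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def lora_forward(W, A, B, x, alpha, r):
    """Compute (W + (alpha / r) * B @ A) @ x without forming the full update matrix."""
    base = matmul(W, x)                 # frozen pre-trained path
    low_rank = matmul(B, matmul(A, x))  # trainable path: project down to r, then back up
    scale = alpha / r
    return [[b + scale * l for b, l in zip(b_row, l_row)]
            for b_row, l_row in zip(base, low_rank)]


random.seed(0)
d_out, d_in, r, alpha = 64, 64, 4, 8  # illustrative sizes only

W = [[random.gauss(0, 1) for _ in range(d_in)] for _ in range(d_out)]  # frozen weights
A = [[random.gauss(0, 0.01) for _ in range(d_in)] for _ in range(r)]   # trainable
B = [[0.0] * r for _ in range(d_out)]                                  # trainable, starts at zero
x = [[random.gauss(0, 1)] for _ in range(d_in)]                        # one input column vector

# With B initialised to zero, the adapted layer reproduces the frozen layer exactly,
# so fine-tuning starts from the pre-trained behaviour.
assert lora_forward(W, A, B, x, alpha, r) == matmul(W, x)

full_params = d_out * d_in        # parameters in a full update of W
lora_params = r * (d_in + d_out)  # parameters in A and B combined
print(f"full update: {full_params} params, LoRA update: {lora_params} params")
```

Even at this toy size the low-rank update needs 512 trainable values instead of 4,096, and the gap widens as the layer dimensions grow while r stays small.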

Setting up your Environment

Before diving into the details of this tutorial, it’s essential to set up your computing environment properly. Here’s a step-by-step guide to do just that:

1. Create an Intel Developer Cloud Account

Intel Gaudi2 AI accelerator cloud instances are available on the Intel Developer Cloud (IDC). You can create a free account and explore various compute platforms offered by Intel. Please follow the instructions here to get started.

2. Launching Container

The recommended way to run on Intel® Gaudi® AI accelerators is inside Habana’s pre-configured Docker containers. Instructions for setting up a containerized development environment can be found here.

3. Accessing the sample code

Once connected to the Intel® Gaudi®2 AI accelerator machine, run the following command to clone the Gaudi-tutorials repository:

git clone https://github.com/HabanaAI/Gaudi-tutorials.git

The demo covered in this article runs inside a Jupyter Notebook. There are a few options for running Jupyter notebooks on an Intel Gaudi2 AI accelerator instance:

- Connect to the instance’s remote host using SSH from an IDE like VS Code or PyCharm and run Jupyter Lab inside the IDE.

- Open an SSH tunnel to the instance from your local machine and open Jupyter Lab directly in your local browser.

In the file tree, navigate to the folder Gaudi-tutorials/PyTorch/llama2_fine_tuning_inference/ and open the following notebook: llama2_fine_tuning_inference.ipynb

Fine-tuning Llama2-7B with PEFT (LoRA)

If you have followed the instructions above correctly, running this sample should be as easy as executing all of the cells in the Jupyter Notebook.

4. Model Access

We start with the foundational Llama-2-7b-hf model from Hugging Face and fine-tune it on the openassistant-guanaco dataset for causal language modeling (text generation).

- The openassistant-guanaco dataset is a subset of the Open Assistant Dataset. This subset of the data only contains the highest-rated paths in the conversation tree, with a total of 9,846 samples.

- Using Llama2 requires users to accept Meta’s license terms before accessing it through the Transformers library. Use of the pretrained model is subject to compliance with third party licenses, including the “Llama 2 Community License Agreement” (LLAMAV2). For guidance on the intended use of the LLAMA2 model, what will be considered misuse and out-of-scope uses, who are the intended users, and additional terms, please review and read the instructions at this link: https://ai.meta.com/llama/license/. Users bear sole liability and responsibility to follow and comply with any third party licenses, and Habana Labs disclaims and will bear no liability with respect to users’ use or compliance with third party licenses.

- Follow these instructions to create a Hugging Face account token, then log in to Hugging Face by running:

huggingface-cli login --token <your token here>

5. Setting up Additional Dependencies

Before you can run fine-tuning, you must install three libraries designed to deliver the highest performance on Intel® Gaudi®2 AI accelerators. All of the following commands can be found in the sample notebook:

- Habana DeepSpeed: Enables you to leverage ZeRO-1 and ZeRO-2 optimizations on Intel Gaudi AI accelerators. To install, run:

pip install -q git+https://github.com/HabanaAI/DeepSpeed.git

- Parameter Efficient Fine Tuning (PEFT): Lets you efficiently adapt pre-trained models by tuning only a small number of parameters. LoRA is one of the methods that PEFT provides. To install, run:

git clone https://github.com/huggingface/peft.git

cd peft

pip install -q .

- Optimum-Habana: Abstracts away lower-level libraries to make it easy to interface between Intel® Gaudi® AI accelerators and Hugging Face’s most popular APIs. To install, run:

pip install -q --upgrade-strategy eager optimum[habana]

- For language modeling, Habana provides a requirements file for this workload. You can find it at optimum-habana/examples/language-modeling/requirements.txt and follow the instructions in the notebook to install it into your environment:

cd optimum-habana/examples/language-modeling/

pip install -q -r requirements.txt

6. Starting the Fine-tuning Process
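Before launching the training run, it can help to confirm that the libraries from the previous step are visible to the Python interpreter. The following is a small sketch using only the standard library. The import names `deepspeed`, `peft`, and `optimum.habana` are assumptions about how these packages expose themselves, so adjust the list if your environment differs.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if `module_name` can be located in the current environment.

    For dotted names, Python imports the parent package in order to resolve
    the child, so a missing or broken parent is reported as "not installed".
    """
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        return False


# ASSUMPTION: import names for the libraries installed in the previous step.
REQUIRED = ["deepspeed", "peft", "optimum.habana"]

missing = [name for name in REQUIRED if not is_installed(name)]
if missing:
    print("Missing packages:", ", ".join(missing))
else:
    print("All fine-tuning dependencies were found.")
```

Running this in a notebook cell gives a quick signal before committing accelerator time to a run that would fail on import.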

We are set to fine-tune using the PEFT method, which refines only a minimal set of model parameters, significantly cutting down on computational and memory load. Recent PEFT techniques have been reported to achieve performance comparable to full fine-tuning. We run language modeling with LoRA using the run_lora_clm.py script:

python ../gaudi_spawn.py --use_deepspeed \
    --world_size 8 run_lora_clm.py \
    --model_name_or_path meta-llama/Llama-2-7b-hf \
    --dataset_name timdettmers/openassistant-guanaco \
    --bf16 True \
    --output_dir ./model_lora_llama \
    --num_train_epochs 2 \
    --per_device_train_batch_size 2 \
    --per_device_eval_batch_size 2 \
    --gradient_accumulation_steps 4 \
    --evaluation_strategy "no" \
    --save_strategy "steps" \
    --save_steps 2000 \
    --save_total_limit 1 \
    --learning_rate 1e-4 \
    --logging_steps 1 \
    --dataset_concatenation \
    --do_train \
    --use_habana \
    --use_lazy_mode \
    --throughput_warmup_steps 3

Let’s explore some of the parameters in the command above:

--use_deepspeed enables the use of DeepSpeed.

--world_size 8 indicates the number of workers in the distributed system. Since each Intel® Gaudi®2 AI accelerator node contains 8 Intel Gaudi AI accelerator cards, we set this to 8 to leverage all the cards on the node.

--bf16 True enables half-precision training in the bfloat16 (brain floating point) format.

--num_train_epochs 2 sets the number of epochs to 2. During the development of this demo, we noticed that the loss flattened at 1.5 epochs. Thus, we kept it at 2. This will vary based on other hyperparameters, datasets, and pre-trained models.

--use_habana allows training to run on Intel® Gaudi® AI accelerators.
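The batch-related flags in the command combine into a single effective batch size per optimizer step. The short calculation below assumes standard data-parallel training, where every worker processes its own micro-batch and gradients are accumulated before each update. The function name is a hypothetical helper introduced for illustration.

```python
def effective_batch_size(world_size: int, per_device_batch: int, accumulation_steps: int) -> int:
    """Sequences consumed per optimizer step under plain data parallelism."""
    return world_size * per_device_batch * accumulation_steps


# Values taken from the fine-tuning command:
# --world_size 8, --per_device_train_batch_size 2, --gradient_accumulation_steps 4
batch = effective_batch_size(world_size=8, per_device_batch=2, accumulation_steps=4)
print(batch)  # 64
```

If you change the number of cards or the per-device batch size, adjusting the accumulation steps is one way to keep this product, and therefore the optimization behaviour, roughly comparable.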

Impressively, just 0.06% of the model’s roughly 7 billion parameters are updated, and thanks to DeepSpeed, memory usage is capped at 31.03 GB of the 94.61 GB available. This efficient process requires only two epochs and wraps up in under six minutes.
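The 0.06% figure can be sanity-checked with some quick arithmetic. The sketch below assumes a LoRA rank of 8 applied to the query and value projections of every decoder layer. The article does not state these settings, so treat them as illustrative assumptions. The hidden size (4096) and layer count (32) are those of Llama-2-7B, and the base parameter count is the commonly reported figure.

```python
hidden_size = 4096           # Llama-2-7B hidden dimension
num_layers = 32              # Llama-2-7B decoder layers
base_params = 6_738_415_616  # commonly reported parameter count for Llama-2-7B

lora_rank = 8                # ASSUMPTION: not stated in the article
adapted_per_layer = 2        # ASSUMPTION: query and value projections only

# Each adapted (hidden x hidden) projection gains A (rank x hidden) and B (hidden x rank).
params_per_module = lora_rank * (hidden_size + hidden_size)
trainable = params_per_module * adapted_per_layer * num_layers
fraction = trainable / (base_params + trainable)
print(f"{trainable:,} trainable parameters, {fraction:.2%} of the total")

# Share of device memory in use, from the figures quoted in the text.
memory_used_gb, memory_total_gb = 31.03, 94.61
print(f"memory in use: {memory_used_gb / memory_total_gb:.1%}")
```

Under these assumptions the adapters add about 4.2 million trainable parameters, which matches the quoted 0.06%, and the reported memory usage corresponds to roughly a third of the device memory.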

Inference with Llama2

After finishing the fine-tuning process, we can leverage the PEFT LoRA tuned weights to perform inference on a sample prompt.

7. Establishing a Baseline

To establish a baseline, we can analyze a snippet of the raw foundational model’s response without the LoRA-tuned parameters:

python run_generation.py \
    --model_name_or_path meta-llama/Llama-2-7b-hf \
    --batch_size 1 \
    --do_sample \
    --max_new_tokens 500 \
    --n_iterations 4 \
    --use_kv_cache \
    --use_hpu_graphs \
    --bf16 \
    --prompt "I am a dog. Please help me plan a surprise birthday party for my human, including fun activities, games and decorations. And don't forget to order a big bone-shaped cake for me to share with my fur friends!"

From the command, please note the values of the following parameters:

--max_new_tokens limits the number of newly generated tokens to 500.

--bf16 enables inference at bf16 precision.

--prompt is where we specify the prompt we want to give to the model.

Below is the response we get from the raw pre-trained model:

In this video I’ll show you how to install and setup your new Dell laptop. This is a step-by-step video that will walk you through the installation process. A few weeks ago, I had a chance to take a quick trip to San Diego. I spent a few days in the city and then a few days in the mountains …

As you might have noticed, the results are incoherent. We asked for party planning suggestions and got information about laptops and a trip to San Diego.

8. Inference with LoRA Tuned Model

Now, let’s provide the same prompt but with the LoRA-tuned layers and evaluate the response:

python run_generation.py \
    --model_name_or_path meta-llama/Llama-2-7b-hf \
    --batch_size 1 \
    --do_sample \
    --max_new_tokens 500 \
    --n_iterations 4 \
    --use_kv_cache \
    --use_hpu_graphs \
    --bf16 \
    --prompt "I am a dog. Please help me plan a surprise birthday party for my human, including fun activities, games and decorations. And don't forget to order a big bone-shaped cake for me to share with my fur friends!" \
    --peft_model /root/Gaudi-tutorials/PyTorch/llama2_fine_tuning_inference/optimum-habana/examples/language-modeling/model_lora_llama/

From the command, please note the value of the following parameter:

--peft_model specifies the path to the fine-tuned components of the model (generated during the fine-tuning step). This adds a small set of layers to the existing model.
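For readers curious what attaching the fine-tuned components looks like in code, here is a rough sketch using the generic Hugging Face Transformers and PEFT APIs. It is not the Gaudi-optimized path taken by run_generation.py (no HPU graphs or KV-cache tuning), and the helper names `load_lora_model` and `generate` are hypothetical. The heavy libraries are imported lazily inside the function so that the definitions themselves load anywhere.

```python
def load_lora_model(base_model_id: str, adapter_dir: str):
    """Load the frozen base model and attach LoRA adapter weights from `adapter_dir`."""
    # Lazy imports: torch, transformers and peft are only needed when this is called.
    import torch
    from peft import PeftModel
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(base_model_id)
    base = AutoModelForCausalLM.from_pretrained(base_model_id, torch_dtype=torch.bfloat16)
    # Wraps the base model and adds the small LoRA layers on top of it.
    model = PeftModel.from_pretrained(base, adapter_dir)
    model.eval()
    return tokenizer, model


def generate(tokenizer, model, prompt: str, max_new_tokens: int = 500) -> str:
    """Sample a completion for `prompt` and return it as text."""
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, do_sample=True, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


# Example usage (requires model access and the adapter directory from fine-tuning):
# tokenizer, model = load_lora_model("meta-llama/Llama-2-7b-hf", "./model_lora_llama")
# print(generate(tokenizer, model, "I am a dog. Please help me plan a party."))
```

The base weights stay untouched on disk. Only the adapter directory produced by fine-tuning differs between the baseline and the tuned run, which is why the same base model ID appears in both commands.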

Below is a snippet of the response we get from the fine-tuned model:

I’m sorry, but I’m not a dog, and I don’t know how to plan a surprise birthday party. But I can give you some ideas for fun activities and games that your human might enjoy. Here are some fun activities and games that your human might enjoy: Puzzle games: Your human might enjoy playing puzzle games like jigsaw puzzles or logic puzzles. You could also look for games that involve building something, like a model airplane or a LEGO set …

As you might have noticed, the results are much better, showcasing the significant impact that under 6 minutes and ~$0.86 of fine-tuning on Intel® Gaudi®2 AI accelerators can have on the quality of LLM responses. Now imagine fine-tuning with a dataset that’s meaningful to you.

Next Steps

Test other prompts during inference and observe their impact on quality and latency.

Adjust the fine-tuning hyperparameters to optimize performance on specific language modeling tasks.

Try other models. For a complete list of models optimized for Intel® Gaudi® AI accelerators, visit: https://huggingface.co/docs/optimum/habana/index

Fine-tune with a unique dataset to build a domain/use-case-specific model.

Review the official benchmarks on Intel Gaudi2 AI accelerators.

Copyright© 2023 Habana Labs, Ltd. an Intel Company.

Licensed under the Apache License, Version 2.0 (the “License”);

You may not use this file except in compliance with the License. You may obtain a copy of the License at https://www.apache.org/licenses/LICENSE-2.0 Unless required by applicable law or agreed to in writing, software distributed under the License is distributed on an “AS IS” BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.